| (4.6) |

Optimisation is a general term that refers to finding stationary points of a function. Many problems in computational chemistry can be formulated as the optimisation of a multidimensional function, NEB optimisation being one of them. The goal of an optimisation problem can be formulated as follows: find a combination of parameters (independent variables) that optimises a given quantity (the objective function), possibly with some restrictions on the allowed parameter ranges [131]. To know exactly what we are looking for, optimality conditions need to be defined. The condition

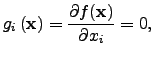

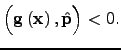

More easily identified optimality conditions can be used instead of Equation 2.6 and Equation 2.7 if $f\left({\bf x}\right)$ is a function with continuous first and second derivatives, namely, the stationary point and the strong local minimum conditions. A stationary point is a point where the gradient vanishes:

$\displaystyle \nabla f\left({\bf x}\right) = {\bf 0}$ | (4.8) |

![$\displaystyle \left[{\bf H}\left({\bf x}\right)\right]_{ij} = \frac{\partial^2 f\left({\bf x}\right)}{\partial x_i \partial x_j}$](img342.png) | (4.9) |

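A strong local minimum is a stationary point at which the Hessian matrix ${\bf H}\left({\bf x}\right)$ of Equation 4.9 is positive definite:

$\displaystyle {\bf y}^{T}\, {\bf H}\left({\bf x}\right)\, {\bf y} > 0 \quad \forall\, {\bf y} \neq {\bf 0}$ | (4.10) |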
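The two conditions can be checked numerically. The sketch below is illustrative only: it assumes that the strong local minimum condition amounts to a stationary point with a positive-definite Hessian (Equation 4.9), approximates the derivatives by central differences, and tests positive definiteness by attempting a Cholesky factorisation. The function names, tolerances and the two test surfaces (`bowl`, `saddle`) are hypothetical choices made for this example.

```python
import math


def gradient(f, x, h=1e-5):
    """Central-difference approximation to the gradient of f at x."""
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((f(xp) - f(xm)) / (2.0 * h))
    return g


def hessian(f, x, h=1e-4):
    """Central-difference approximation to H_ij = d^2 f / (dx_i dx_j)."""
    n = len(x)
    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            def shifted(si, sj):
                y = list(x)
                y[i] += si * h
                y[j] += sj * h
                return f(y)
            H[i][j] = (shifted(1, 1) - shifted(1, -1)
                       - shifted(-1, 1) + shifted(-1, -1)) / (4.0 * h * h)
    return H


def is_positive_definite(H):
    """Cholesky factorisation of a symmetric matrix succeeds only if it is positive definite."""
    n = len(H)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = H[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


def classify(f, x, gtol=1e-6):
    """Apply the stationary point test first, then the Hessian test."""
    g = gradient(f, x)
    if max(abs(gi) for gi in g) > gtol:
        return "not stationary"
    if is_positive_definite(hessian(f, x)):
        return "strong local minimum"
    return "stationary, not a strong local minimum"


def bowl(x):
    # Hypothetical test surface with a single minimum at (1, -0.5).
    return (x[0] - 1.0) ** 2 + 2.0 * (x[1] + 0.5) ** 2


def saddle(x):
    # Hypothetical test surface with a first-order saddle at the origin.
    return x[0] ** 2 - x[1] ** 2
```

With these definitions, `classify(bowl, [1.0, -0.5])` reports a strong local minimum, whereas `classify(saddle, [0.0, 0.0])` reports a stationary point that fails the Hessian test. This is the distinction that matters when a minimiser converges to a transition state rather than to a minimum.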

Formally, optimisation of an NEB qualifies as a nonlinear unconstrained continuous multivariable optimisation problem. Many algorithms are available for solving problems of this type; they differ in their computational requirements, convergence and other properties. However, NEB optimisation carries an additional difficulty: owing to the projections involved, the objective function that is being minimised is unknown. Nevertheless, several optimisation techniques were found to be stable when applied to NEB. Most of these are based on steepest-descent methods and, consequently, are not very efficient. The L-BFGS and QVV minimisers discussed in this section are based on the deterministic approach for finding local minima.

The basic structure of any local minimiser can be summarised as follows:

The descent direction | (4.11) |

| (4.12) |
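A conventional local minimiser loop can be sketched as follows. This is a minimal illustration under stated assumptions, not the scheme used in this work: the four steps shown (convergence test, descent direction, step length, update) are the textbook arrangement, and `minimise`, `steepest_descent`, `fixed_step` and `grad_quadratic` are hypothetical names introduced for the example. The direction and step-length rules are passed in as functions so that different choices can be swapped without touching the loop.

```python
import math


def minimise(grad, x0, direction, step_length, gtol=1e-6, max_iter=1000):
    """Generic descent loop; returns the final point and the iteration count."""
    x = list(x0)
    for iteration in range(max_iter):
        g = grad(x)
        # 1. Convergence test on the gradient norm.
        if math.sqrt(sum(gi * gi for gi in g)) < gtol:
            return x, iteration
        # 2. Descent direction p: must point downhill, i.e. g . p < 0.
        p = direction(x, g)
        if sum(gi * pi for gi, pi in zip(g, p)) >= 0.0:
            raise ValueError("p is not a descent direction")
        # 3. Step length along p.
        alpha = step_length(x, g, p)
        # 4. Update the current point.
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
    return x, max_iter


def steepest_descent(x, g):
    # Simplest possible choice: move against the gradient.
    return [-gi for gi in g]


def fixed_step(x, g, p):
    # Assumed constant step; small enough for the test function below.
    return 0.1


def grad_quadratic(x):
    # Gradient of the hypothetical test function f(x, y) = x^2 + 4 y^2.
    return [2.0 * x[0], 8.0 * x[1]]
```

Calling `minimise(grad_quadratic, [1.0, 1.0], steepest_descent, fixed_step)` drives both coordinates towards zero. Note that the loop only needs the gradient, which is convenient when, as for the NEB, the objective function itself is not available.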

The step length can be determined using either 'line search' or 'trust radius' approaches. Line search is essentially a one-dimensional minimisation of the objective function along the descent direction, performed at every step [131]. In the trust-radius-based approach the step length is adjusted dynamically depending on how well the minimisation algorithm predicts the change in the objective function value [9]. There is no clear evidence for the superiority of one method over the other [131]; however, in combination with the limited-memory version of the BFGS algorithm the trust radius approach was found to be more efficient for treating problems with discontinuities, such as problems involving periodic boundary conditions with cutoffs [9].
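The difference between the two step-length strategies can be illustrated with steepest-descent directions. The sketch below makes several assumptions that are not taken from the source: the line search is a simple backtracking (Armijo) search rather than an exact one-dimensional minimisation, the trust-radius variant uses a first-order model to predict the change in the function value, and the thresholds (0.25, 0.75) and scaling factors (0.5, 2) are common textbook defaults. All function names are hypothetical.

```python
import math


def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))


def backtracking_line_search(f, x, g, p, alpha=1.0, shrink=0.5, c=1e-4):
    """Shrink alpha until f decreases sufficiently along p (Armijo condition)."""
    f0 = f(x)
    slope = sum(gi * pi for gi, pi in zip(g, p))  # directional derivative, < 0
    while f([xi + alpha * pi for xi, pi in zip(x, p)]) > f0 + c * alpha * slope:
        alpha *= shrink
    return alpha


def descend_line_search(f, grad, x0, gtol=1e-6, max_iter=10000):
    """Steepest descent with a line search performed at every step."""
    x = list(x0)
    for k in range(max_iter):
        g = grad(x)
        if norm(g) < gtol:
            return x, k
        p = [-gi for gi in g]
        alpha = backtracking_line_search(f, x, g, p)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
    return x, max_iter


def descend_trust_radius(f, grad, x0, radius=0.5, gtol=1e-6, max_iter=10000):
    """Steepest descent with the step capped by a dynamically adjusted radius."""
    x = list(x0)
    for k in range(max_iter):
        g = grad(x)
        gnorm = norm(g)
        if gnorm < gtol:
            return x, k
        # Steepest-descent step, truncated so that its length is <= radius.
        scale = min(1.0, radius / gnorm)
        s = [-scale * gi for gi in g]
        predicted = sum(gi * si for gi, si in zip(g, s))  # first-order model, < 0
        x_new = [xi + si for xi, si in zip(x, s)]
        actual = f(x_new) - f(x)
        ratio = actual / predicted
        if ratio > 0.75:
            radius *= 2.0   # model predicted the change well: allow longer steps
        elif ratio < 0.25:
            radius *= 0.5   # poor prediction: shorten the allowed step
        if actual < 0.0:
            x = x_new       # accept only steps that lower the function value
    return x, max_iter


def f_quadratic(x):
    # Hypothetical test function f(x, y) = x^2 + 4 y^2.
    return x[0] ** 2 + 4.0 * x[1] ** 2


def grad_quadratic(x):
    return [2.0 * x[0], 8.0 * x[1]]
```

Both `descend_line_search(f_quadratic, grad_quadratic, [1.0, 1.0])` and `descend_trust_radius(f_quadratic, grad_quadratic, [1.0, 1.0])` converge to the origin. The line search evaluates the function several times per step, while the trust-radius variant evaluates it once per step and carries the step-length information over from one iteration to the next.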