Stochastic processes are widely used to treat phenomena with random factors and noise. Markov processes are an important class of stochastic processes for which future transitions do not depend upon how the current state was reached. Markov processes restricted to a discrete, finite, or countably infinite state space are called Markov chains [123, 187, 188]. The parameter that is used to number all the states in the state space is called the time parameter. Many interesting problems of chemical kinetics concern the analysis of finite-state samples of otherwise infinite state space [9].

When analysing the kinetic databases obtained from discrete path sampling (DPS) studies [8] it can be difficult to extract the phenomenological rate constants for processes that occur over very long time scales [9]. DPS databases are composed of local minima of the potential energy surface (PES) and the transition states that connect them. While minima correspond to mechanically stable structures, the transition states specify how these structures interconvert and the corresponding rates. Whenever the potential energy barrier for the event of interest is large in comparison with the event becomes rare, where is the temperature and is Boltzmann’s constant.

The most important tools previously employed to extract kinetic information from a DPS stationary point database are the master equation [189], kinetic Monte Carlo [190, 191] (KMC) and matrix multiplication (MM) methods [8]. The system of linear master equations in its matrix formulation can be solved numerically to yield the time evolution of the occupation probabilities starting from an arbitrary initial distribution. This approach works well only for small problems, as the diagonalisation of the transition matrix, , scales as the cube of the number of states [9]. In addition, numerical problems arise when the magnitude of the eigenvalues corresponding to the slowest relaxation modes approaches the precision of the zero eigenvalue corresponding to equilibrium [192]. The KMC approach is a stochastic technique that is commonly used to simulate the dynamics of various physical and chemical systems, examples being formation of crystal structures [193], nanoparticle growth [194] and diffusion [195]. The MM approach provides a way to sum contributions to phenomenological two-state rate constants from pathways that contain progressively more steps. It is based upon a steady-state approximation, and provides the corresponding solution to the linear master equation [189, 196]. The MM approach has been used to analyse DPS databases in a number of systems ranging from Lennard-Jones clusters [8, 10] to biomolecules [133, 197].

Both the standard KMC and MM formulations provide rates at a computational cost that generally grows exponentially as the temperature is decreased. In this chapter we describe alternative methods that are deterministic and formally exact, where the computational requirements are independent of the temperature and the time scale on which the process of interest takes place.

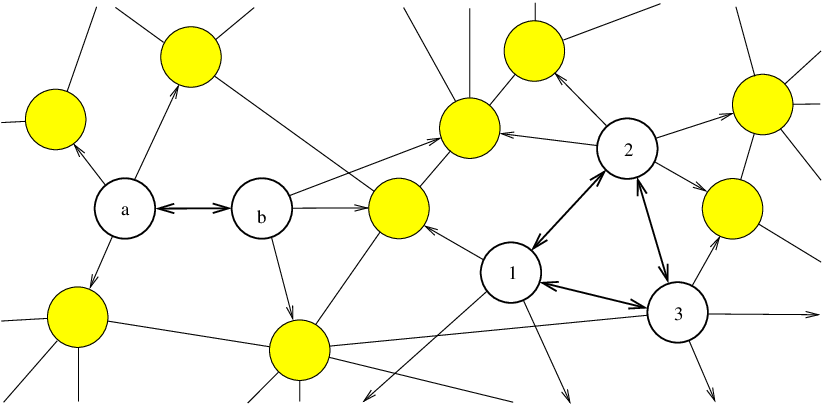

In general, to fully define a Markov chain it is necessary to specify all the possible states of the system and the rules for transitions between them. Graph theoretical representations of finite-state Markov chains are widely used [187, 198–200]. Here we adopt a digraph [154, 201] representation of a Markov chain, where nodes represent the states and edges represent the transitions of non-zero probability. The edge describes a transition from node to node and has a probability associated with it, which is commonly known as a routing or branching probability. A node can be connected to any number of other nodes. Two nodes of a graph are adjacent if there is an edge between them [202].

For digraphs all connections of a node are classified as incoming or outgoing. The total number of incoming connections is the in-degree of a node, while the total number of outgoing connections is the out-degree. In a symmetric digraph the in-degree and out-degree are the same for every node [154]. is the set of indices of all nodes that are connected to node via incoming edges that finish at node . Similarly, is the set of the indices of all the nodes that are connected to node via outgoing edges from node . The degree of a graph is the maximum degree of all of its nodes. The expectation value for the degree of an undirected graph is the average number of connections per node.

For any node the transition probabilities add up to unity,

| (4.1) |

where the sum is over all . Unless specified otherwise all sums are taken over the set of indices of adjacent nodes or, since the branching probability is zero for non-adjacent nodes, over the set of all the nodes.

In a computer program dense graphs are usually stored in the form of adjacency matrices [154]. For sparse graphs [201] a more compact but less efficient adjacency-lists-based data structure exists [154]. To store a graph representation of a Markov chain, in addition to connectivity information (available from the adjacency matrix), the branching probabilities must be stored. Hence for dense graphs the most convenient approach is to store a transition probability matrix [187] with transition probabilities for non-existent edges set to zero. For sparse graphs, both the adjacency list and a list of corresponding branching probabilities must be stored.

The KMC method can be used to generate a memoryless (Markovian) random walk and hence a set of trajectories connecting initial and final states in a DPS database. Many trajectories are necessary to collect appropriate statistics. Examples of pathway averages that are usually obtained with KMC are the mean path length and the mean first passage time. Here the KMC trajectory length is the number of states (local minima of the PES in the current context) that the walker encounters before reaching the final state. The first passage time is defined as the time that elapses before the walker reaches the final state. For a given KMC trajectory the first passage time is calculated as the sum of the mean waiting times in each of the states encountered.

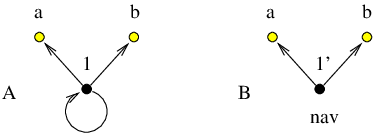

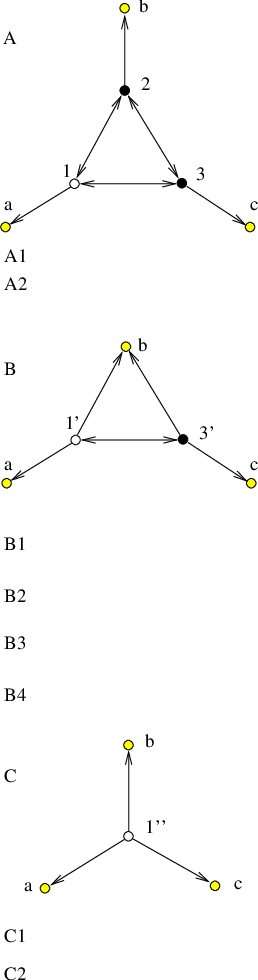

Within the canonical Metropolis Monte Carlo approach a step is always taken if the proposed move lowers the energy, while steps that raise the energy are allowed with a probability that decreases with the energy difference between the current and proposed states [32]. An efficient way to propagate KMC trajectories was suggested by Bortz, Kalos, and Lebowitz (BKL) [190]. According to the BKL algorithm, a step is chosen in such a way that the ratios between transition probabilities of different events are preserved, but rejections are eliminated. Figure 4.1 explains this approach for a simple discrete-time Markov chain. The evolution of an ordinary KMC trajectory is monitored by the ‘time’ parameter , , which is incremented by one every time a transition from any state is made. The random walker is in state at time . The KMC trajectory is terminated whenever an absorbing state is encountered. As approaches unity transitions out of state become rare. To ensure that every time a random number is generated (one of the most time consuming steps in a KMC calculation) a move is made to a neighbouring state we average over the transitions from state to itself to obtain the Markov chain depicted in Figure 4.1 (b).

Transitions from state to itself can be modelled by a Bernoulli process [33] with the probability of success equal to . The average time for escape from state is obtained as

| (4.2) |

which can be used as a measure of the efficiency of trapping [203]. Transition probabilities out of state are renormalised:

| (4.3) |

Similar ideas underlie the accelerated Monte Carlo algorithm suggested by Novotny [26]. According to this ‘Monte Carlo with absorbing Markov chains’ (MCAMC) method, at every step a Markov matrix, , is formed, which describes the transitions in a subspace that contains the current state , and a set of adjacent states that the random walker is likely to visit from . A trajectory length, , for escape from is obtained by bracketing a uniformly distributed random variable, , as

| (4.4) |

Then an -step leapfrog move is performed to one of the states and the simulation clock is incremented by . State is chosen at random with probability

| (4.5) |

where is the transition probability from state to state . Both the BKL and MCAMC methods can be many orders of magnitude faster than the standard KMC method when kinetic traps are present.

In chemical kinetics transitions out of a state are described using a Poisson process, which can be considered a continuous-time analogue of Bernoulli trials. The transition probabilities are determined from the rates of the underlying transitions as

| (4.6) |

There may be several escape routes from a given state. Transitions from any state to directly connected states are treated as competing independent Poisson processes, which together generate a new Poisson distribution [179]. independent Poisson processes with rates combine to produce a Poisson process with rate . The waiting time for a transition to occur to any connected state is then exponentially distributed as [204].

Given the exponential distribution of waiting times the mean waiting time in state before escape, , is and the variance of the waiting time is simply . Here is the rate constant for transitions from to . When the average of the distribution of times is the property of interest, and not the distribution of times itself, it is sufficient to increment the simulation time by the mean waiting time rather than by a value drawn from the appropriate distribution [9, 205]. This modification to the original KMC formulation [206, 207] reduces the cost of the method and accelerates the convergence of KMC averages without affecting the results.

The result of a DPS simulation is a database of local minima and transition states from the PES [8–10]. To extract thermodynamic and kinetic properties from this database we require partition functions for the individual minima and rate constants, , for the elementary transitions between adjacent minima and . We usually employ harmonic densities of states and statistical rate theory to obtain these quantities, but these details are not important here. To analyse the global kinetics we further assume Markovian transitions between adjacent local minima, which produces a set of linear (master) equations that governs the evolution of the occupation probabilities towards equilibrium [189, 196]

| (4.7) |

where is the occupation probability of minimum at time .

All the minima are classified into sets , and . When local equilibrium is assumed within the and sets we can write

| (4.8) |

where and . If the steady-state approximation is applied to all the intervening states , so that

| (4.9) |

then Equation 4.7 can be written as [9]

| (4.10) |

The rate constants and for forward and backward transitions between states and are the sums over all possible paths within the set of intervening minima of the products of the branching probabilities corresponding to the elementary transitions for each path:

| (4.11) |

and similarly for [8]. The sum is over all paths that begin from a state and end at a state , and the prime indicates that paths are not allowed to revisit states in . In previous contributions [8, 10, 133, 197] this sum was evaluated using a weighted adjacency matrix multiplication (MM) method, which will be reviewed in Section 4.2.

We now show that the evaluation of the DPS sum in Equation 4.11 and the calculation of KMC averages are two closely related problems.

For KMC simulations we define sources and sinks that coincide with the set of initial states and final states , respectively.∗ Every cycle of KMC simulation involves the generation of a single KMC trajectory connecting a node and a node . A source node is chosen from set with probability .

We can formulate the calculation of the mean first passage time from to in graph theoretical terms as follows. Let the digraph consisting of nodes for all local minima and edges for each transition state be . The digraph consisting of all nodes except those belonging to region is denoted by . We assume that there are no isolated nodes in , so that all the nodes in can be reached from every node in . Suppose we start a KMC simulation from a particular node . Let be the expected occupation probability of node after KMC steps, with initial conditions and . We further define an escape probability for each as the sum of branching probabilities to nodes in , i.e.

| (4.12) |

KMC trajectories terminate when they arrive at an minimum, and the expected probability transfer to the region at the th KMC step is . If there is at least one escape route from to with a non-zero branching probability, then eventually all the occupation probabilities in must tend to zero and

| (4.13) |

We now rewrite as a sum over all -step paths that start from and end at without leaving . Each path contributes to according to the appropriate product of branching probabilities, so that

| (4.14) |

where denotes the set of -step paths that start from and end at without leaving , and the last line defines the pathway sum .

It is clear from the last line of Equation 4.14 that for fixed the quantities define a probability distribution. However, the pathway sums are not probabilities, and may be greater than unity. In particular, because the path of zero length is included, which contributes one to the sum. Furthermore, the normalisation condition on the last line of Equation 4.14 places no restriction on terms for which vanishes.

We can also define a probability distribution for individual pathways. Let be the product of branching probabilities associated with a path so that

| (4.15) |

where is the set of all appropriate paths from to of any length that can visit and revisit any node in . If we focus upon paths starting from minima in region

| (4.16) |

where is the set of nodes in that are adjacent to minima in the complete graph , since vanishes for all other nodes. We can rewrite this sum as

| (4.17) |

which defines the non-zero pathway probabilities for all paths that start from any node in set and finish at any node in set . The paths can revisit any minima in the set, but include just one minimum at the terminus. Note that and can be used interchangeably if there is only one state in set .

The average of some property, , defined for each KMC trajectory, , can be calculated from the as

| (4.18) |

Of course, KMC simulations avoid this complete enumeration by generating trajectories with probabilities proportional to , so that a simple running average can be used to calculate . In the following sections we will develop alternative approaches based upon evaluating the complete sum, which become increasingly efficient at low temperature. We emphasise that these methods are only applicable to problems with a finite number of states, which are assumed to be known in advance.

The evaluation of the DPS sum defined in Equation 4.11 can also be rewritten in terms of pathway probabilities:

| (4.19) |

where the prime on the summation indicates that the paths are not allowed to revisit the region. We have also used the fact that .

A digraph representation of the restricted set of pathways defined in Equation 4.19 can be created if we allow sets of sources and sinks to overlap. In that case all the nodes are defined to be sinks and all the nodes in are the sources, i.e. every node in set is both a source and a sink. The required sum then includes all the pathways that finish at sinks of type , but not those that finish at sinks of type . The case when sets of sources and sinks (partially) overlap is discussed in detail in Section 4.6.

Since the mean first passage time between states and , or the escape time from a subgraph, is of particular interest, we first illustrate a means to derive formulae for these quantities in terms of pathway probabilities.

The average time taken to traverse a path is calculated as , where is the mean waiting time for escape from node , as above, identifies the th node along path , and is the length of path . The mean escape time from a graph if started from node is then

| (4.20) |

If we multiply every branching probability, , that appears in by then the mean escape time can be obtained as:

| (4.21) |

This approach is useful if we have analytic results for the total probability , which may then be manipulated into formulae for , and is standard practice in probability theory literature [208, 209]. The quantity is similar to the ‘ probability’ described in Reference [208]. Analogous techniques are usually employed to obtain and higher moments of the first-passage time distribution from analytic expressions for the first-passage probability generating function (see, for example, References [210, 211]). We now define and the related quantities

| (4.22) |

Note that etc., while the mean escape time can now be written as

| (4.23) |

In the remaining sections we show how to calculate the pathway probabilities, , exactly, along with pathway averages, such as the waiting time. Chain graphs are treated in Section 4.2 and the results are generalised to arbitrary graphs in Section 4.3.

A general account of the problem of the first passage time in chemical physics was given by Weiss as early as 1965 [212]. In Reference [212] he summarised various techniques for solving such problems to date, and gave a general formula for moments of the first passage time in terms of the Green’s function of the Fokker-Plank operator. Explicit expressions for the mean first passage times in terms of the basic transition probabilities for the case of a one-dimensional random walk were obtained by Ledermann and Reuter in 1954 [213], Karlin and MacGregor in 1957 [214], Weiss in 1965 [212], Gardiner in 1985 [215], Van den Broeck in 1988 [216], Le Doussal in 1989 [217], Murthy and Kehr and Matan and Havlin in 1989-1990 [211, 218, 219], Raykin in 1992 [210], Bar-Haim and Klafter in 1998 [203], Pury and Cáceres in 2003 [220], and Slutsky, Kardar and Mirny in 2004 [221, 222]. The one-dimensional random walk is therefore a very well researched topic, both in the field of probability and its physical and chemical applications. The results presented in this section differ in the manner of presentation.

A random walk in a discrete state space , where all the states can be arranged on a linear chain in such a way that for all , is called a one-dimensional or simple random walk (SRW). The SRW model attracts a lot of attention because of its rich behaviour and mathematical tractability. A well known example of its complexity is the anomalous diffusion law discovered by Sinai [223]. He showed that there is a dramatic slowing down of an ordinary power law diffusion (RMS displacement is proportional to instead of ) if a random walker at each site experiences a random bias field . Stanley and Havlin generalised the Sinai model by introducing long-range correlations between the bias fields on each site and showed that the SRW can span a range of diffusive properties [224].

Although one-dimensional transport is rarely found on the macroscopic scale at a microscopic level there are several examples, such as kinesin motion along microtubules [225, 226], or DNA translocation through a nanopore [227, 228], so the SRW is interesting not only from a theoretical standpoint. There is a number of models that build upon the SRW that have exciting applications, examples being the SRW walk with branching and annihilation [229], and the SRW in the presence of random trappings [230]. Techniques developed for the SRW were applied to study more complex cases, such as, for example, multistage transport in liquids [231], random walks on fractals [232, 233], even-visiting random walks [234], self-avoiding random walks [235], random walks on percolation clusters [236, 237], and random walks on simple regular lattices [238, 239] and superlattices [240].

A presentation that discusses SRW first-passage probabilities in detail sufficient for our applications is that of Raykin [210]. He considered pathway ensembles explicitly and obtained the generating functions for the occupation probabilities of any lattice site for infinite, half-infinite and finite one-dimensional lattices with the random walker starting from an arbitrary lattice site. As we discuss below, these results have a direct application to the evaluation of the DPS rate constants augmented with recrossings. We have derived equivalent expressions for the first-passage probabilities independently, considering the finite rather than the infinite case, which we discuss in terms of chain digraphs below.

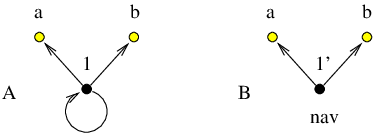

We define a chain as a digraph with nodes and edges, where and . Adjacent nodes in the numbering scheme are connected via two oppositely directed edges, and these are the only connections. A transition probability is associated with every edge , as described in Section 4.1.1. An -node chain is depicted in Figure 4.2 as a subgraph of another graph.

The total probability of escape from the chain via node if started from node is of interest because it has previously been used to associate contributions to the total rate constant from unique paths in DPS studies [8, 9]. We can restrict the sampling to paths without recrossings between intermediate minima if we perform the corresponding recrossing sums explicitly [8].

We denote a pathway in by the ordered sequence of node indices. The length of a pathway is the number of edges traversed. For example, the pathway has length , starts at node and finishes at node . The indices of the intermediate nodes are given in the order in which they are visited. The product of branching probabilities associated with all edges in a path was defined above as . For example, the product for the above pathway is , which we can abbreviate as . For a chain graph , which is a subgraph of , we also define the set of indices of nodes in that are adjacent to nodes in but not members of as . These nodes will be considered as sinks if we are interested in escape from .

Analytical results for are easily derived:

| (4.24) |

These sums converge if the cardinality of the set is greater than zero. To prove this result consider a factor, , of the form

| (4.25) |

and assume that the branching probabilities are all non-zero, and that there is at least one additional escape route from , or . We know that because and . Hence . However, , and equality is only possible if , which contradicts the assumption of an additional escape route. Hence and . The pathway sums , , and can be obtained from Equation 4.24 by permuting the indices. The last two sums in Equation 4.24 are particularly instructive: the ’th term in the sum for and the ’th term in the sum for are the contributions from pathways of length and , respectively.

The pathway sums can be derived for a general chain graph in terms of recursion relations, as shown in Appendix C. The validity of our results for was verified numerically using the matrix multiplication method described in Reference [8]. For a chain of length we construct an transition probability matrix with elements

| (4.26) |

The matrix form of the system of Chapman-Kolmogorov equations [187] for homogeneous discrete-time Markov chains [123, 187] allows us to obtain the -step transition probability matrix recursively as

| (4.27) |

can then be computed as

| (4.28) |

where the number of matrix multiplications, , is adjusted dynamically depending on the convergence properties of the above sum. We note that sink nodes are excluded when constructing and can be less than unity.

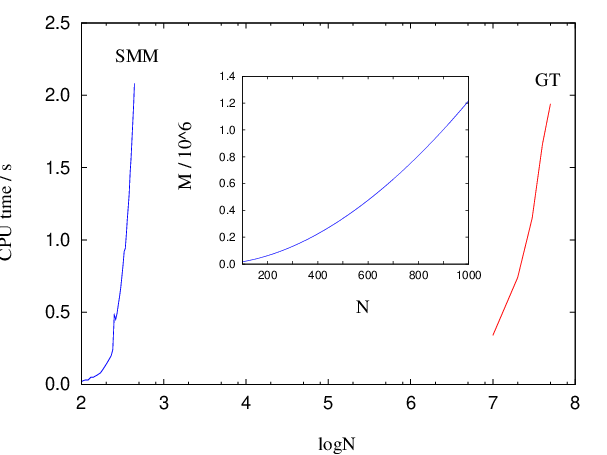

For chains a sparse-optimised matrix multiplication method for scales as , and may suffer from convergence and numerical precision problems for larger values of and branching probabilities that are close to zero or unity [8]. The summation method presented in this section can be implemented to scale as with constant memory requirements (Algorithm B.1). It therefore constitutes a faster, more robust and more precise alternative to the matrix multiplication method when applied to chain graph topologies (Figure 4.3).

Mean escape times for are readily obtained from the results in Equation 4.24 by applying the method outlined in Section 4.1.5:

| (4.29) |

and can be obtained from by permuting the subscripts 1 and 3.

The mean escape time from the graph if started from node can be calculated recursively using the results of Appendix D and Section 4.1.5 or by resorting to a first-step analysis [241].

In a complete digraph each pair of nodes is connected by two oppositely directed edges [155]. The complete graph with graph nodes is denoted , and has nodes and edges, remembering that we have two edges per connection (Figure 4.4).

Due to the complete connectivity we need only consider two cases: when the starting and finishing nodes are the same and when they are distinct. We employ complete graphs for the purposes of generality. An arbitrary graph is a subgraph of with transition probabilities for non-existent edges set to zero. All the results in this section are therefore equally applicable to arbitrary graphs.

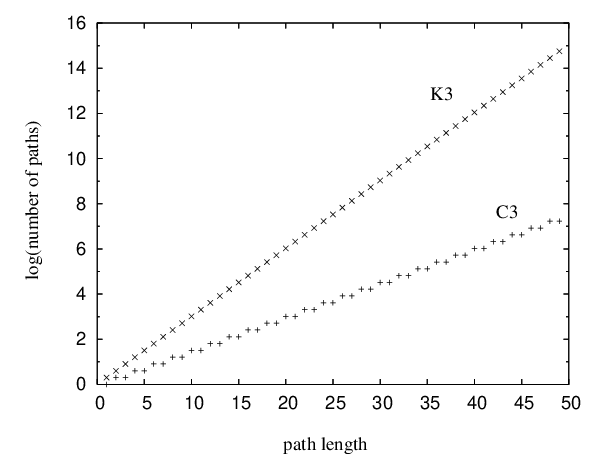

The complete graph will not be considered here as it is topologically identical to the graph . The difference between the and graphs is the existence of edges that connect nodes and . Pathways confined to can therefore contain cycles, and for a given path length they are significantly more numerous (Figure 4.5).

The can again be derived analytically for this graph:

| (4.30) |

The results for any other possibility can be obtained by permuting the node indices appropriately.

The pathway sums for larger complete graphs can be obtained by recursion. For any path leaving from and returning to can be broken down into a step out of to any , all possible paths between and within , and finally a step back to from . All such paths can be combined together in any order, so we have a multinomial distribution [242]:

| (4.31) |

To evaluate we break down the sum into all paths that depart from and return to , followed by all paths that leave node and reach node 1 without returning to . The first contribution corresponds to a factor of , and the second produces a factor for every :

| (4.32) |

where is defined to be unity. Any other can be obtained by a permutation of node labels.

Algorithm B.2 provides an example implementation of the above formulae optimised for incomplete graphs. The running time of Algorithm B.2 depends strongly on the graph density. (A digraph in which the number of edges is close to the maximum value of is termed a dense digraph [202].) For the algorithm runs in time, while for an arbitrary graph it scales as , where is the average degree of the nodes. For chain graphs the algorithm runs in time and therefore constitutes a recursive-function-based alternative to Algorithm B.1 with linear memory requirements. For complete graphs an alternative implementation with scaling is also possible.

Although the scaling of the above algorithm with may appear disastrous, it does in fact run faster than standard KMC and MM approaches for graphs where the escape probabilities are several orders of magnitude smaller than the transition probabilities (Algorithm B.2). Otherwise, for anything but moderately branched chain graphs, Algorithm B.2 is significantly more expensive. However, the graph-transformation-based method presented in Section 4.4 yields both the pathway sums and the mean escape times for a complete graph in time, and is the fastest approach that we have found.

Mean escape times for are readily obtained from the results in Equation 4.30 by applying the method outlined in Section 4.1.5:

| (4.33) |

We have verified this result analytically using first-step analysis and numerically for various values of the parameters and . and obtained quantitative agreement (see Figure 4.6).

Figure 4.7 demonstrates how the advantage of exact summation over KMC and MM becomes more pronounced as the escape probabilities become smaller.

The problem of calculation of the properties of a random walk on irregular networks was addressed previously by Goldhirsch and Gefen [208, 209]. They described a generating-function-based method where an ensemble of pathways is partitioned into ‘basic walks’. A walk was defined as a set of paths that satisfies a certain restriction. As the probability generating functions corresponding to these basic walks multiply, the properties of a network as a whole can be inferred given knowledge of the generating functions corresponding to these basic walks. The method was applied to a chain, a loopless regularly branched chain and a chain containing a single loop. To the best of our knowledge only one [243] out of the 30 papers [209–211, 219–222, 240, 243–264] that cite the work of Goldhirsch and Gefen [208] is an application, perhaps due to the complexity of the method.

Here we present a graph transformation (GT) approach for calculating the pathway sums and the mean escape times for . In general, it is applicable to arbitrary digraphs, but the best performance is achieved when the graph in question is dense. The algorithm discussed in this section will be referred to as DGT (D for dense). A sparse-optimised version of the GT method (SGT) will be discussed in Section 4.5.

The GT approach is similar in spirit to the ideas that lie behind the mean value analysis and aggregation/disaggregation techniques commonly used in the performance and reliability evaluation of queueing networks [187, 265–267]. It is also loosely related to dynamic graph algorithms [268–271], which are used when a property is calculated on a graph subject to dynamic changes, such as deletions and insertions of nodes and edges. The main idea is to progressively remove nodes from a graph whilst leaving the average properties of interest unchanged. For example, suppose we wish to remove node from graph to obtain a new graph . Here we assume that is neither source nor sink. Before node can be removed the property of interest is averaged over all the pathways that include the edges between nodes and . The averaging is performed separately for every node . Next, we will use the waiting time as an example of such a property and show that the mean first passage time in the original and transformed graphs is the same.

The theory is an extension of the results used to perform jumps to second neighbours in previous KMC simulations [8, 272]. Consider KMC trajectories that arrive at node , which is adjacent to . We wish to step directly from to any node in the set of nodes that are adjacent to or , excluding these two nodes themselves. To ensure that the mean first-passage times from sources to sinks calculated in and are the same we must define new branching probabilities, from to all , along with a new waiting time for escape from , . Here, corresponds to the mean waiting time for escape from to any , while the modified branching probabilities subsume all the possible recrossings involving node that could occur in before a transition to a node in . Hence the new branching probabilities are:

| (4.34) |

This formula can also be applied if either or vanishes.

It is easy to show that the new branching probabilities are normalised:

| (4.35) |

To calculate we use the method of Section 4.1.4:

| (4.36) |

The modified branching probabilities and waiting times could be used in a KMC simulation based upon graph . Here we continue to use the notation of Section 4.1.4, where sinks correspond to nodes and sources to nodes in , and contains all the nodes in expect for the set, as before. Since the modified branching probabilities, , in subsume the sums over all path paths from to that involve we would expect the sink probability, , of a trajectory starting at ending at sink , to be conserved. However, since each trajectory exiting from acquires a time increment equal to the average value, , the mean first-passage times to individual sinks, , are not conserved in (unless there is a single sink). Nevertheless, the overall mean first-passage time to is conserved, i.e. . To prove these results more formally within the framework of complete sums consider the effect of removing node on trajectories reaching node from a source node . The sink probability for a particular sink is

| (4.37) |

and similarly for any other node adjacent to . Hence the transformation preserves the individual sink probabilities for any source.

Now consider the effect of removing node on the mean first-passage time from source to sink , , using the approach of Section 4.1.4.

| (4.38) |

where the tildes indicate that every branching probability is replaced by , as above. The first and last terms are unchanged from graph in this construction, but the middle term,

| (4.39) |

is different (unless there is only one sink). However, if we sum over sinks then

| (4.40) |

for all , and we can now simplify the sum over as

| (4.41) |

The same argument can be applied whenever a trajectory reaches a node adjacent to , so that , as required.

The above procedure extends the BKL approach [190] to exclude not only the transitions from the current state into itself but also transitions involving an adjacent node . Alternatively, this method could be viewed as a coarse-graining of the Markov chain. Such coarse-graining is acceptable if the property of interest is the average of the distribution of times rather than the distribution of times itself. In our simulations the average is the only property of interest. In cases when the distribution itself is sought, the approach described here may still be useful and could be the first step in the analysis of the distribution of escape times, as it yields the exact average of the distribution.

The transformation is illustrated in Figure 4.8 for the case of a single source.

Figure 4.8 (a) displays the original graph and its parametrisation. During the first iteration of the algorithm node is removed to yield the graph depicted in Figure 4.8 (b). This change reduces the dimensionality of the original graph, as the new graph contains one node and three edges fewer. The result of the second, and final, iteration of the algorithm is a graph that contains source and sink nodes only, with the correct transition probabilities and mean waiting time [Figure 4.8 (c)].

We now describe algorithms to implement the above approach and calculate mean escape times from complete graphs with multiple sources and sinks. Listings for some of the algorithms discussed here are given in Appendix B. We follow the notation of Section 4.1.4 and consider a digraph consisting of source nodes, sink nodes, and intervening nodes. therefore contains the subgraph .

The result of the transformation of a graph with a single source and sinks using Algorithm B.3 is the mean escape time and pathway probabilities , . Solving a problem with sources is equivalent to solving single source problems. For example, if there are two sources and we first solve a problem where only node is set to be the source to obtain and the pathway sums from to every sink node . The same procedure is then followed for .

The form of the transition probability matrix is illustrated below at three stages: first for the original graph, then at the stage when all the intervening nodes have been removed (line 16 in Algorithm B.3), and finally at the end of the procedure:

| (4.42) |

Each matrix is split into blocks that specify the transitions between the nodes of a particular type, as labelled. Upon termination, every element in the top right block of matrix is non-zero.

Algorithm B.3 uses the adjacency matrix representation of graph , for which the average of the distribution of mean first passage times is to be obtained. For efficiency, when constructing the transition probability matrix we order the nodes with the sink nodes first and the source nodes last. Algorithm B.3 is composed of two parts. The first part (lines 1-16) iteratively removes all the intermediate nodes from graph to yield a graph that is composed of sink nodes and source nodes only. The second part (lines 17-34) disconnects the source nodes from each other to produce a graph with nodes and directed edges connecting each source with every sink. Lines 13-15 are not required for obtaining the correct answer, but the final transition probability matrix looks neater.

The computational complexity of lines 1-16 of Algorithm B.3 is . The second part of Algorithm B.3 (lines 17-34) scales as . The total complexity for the case of a single source and for the case when there are no intermediate nodes is and , respectively. The storage requirements of Algorithm B.3 scale as .

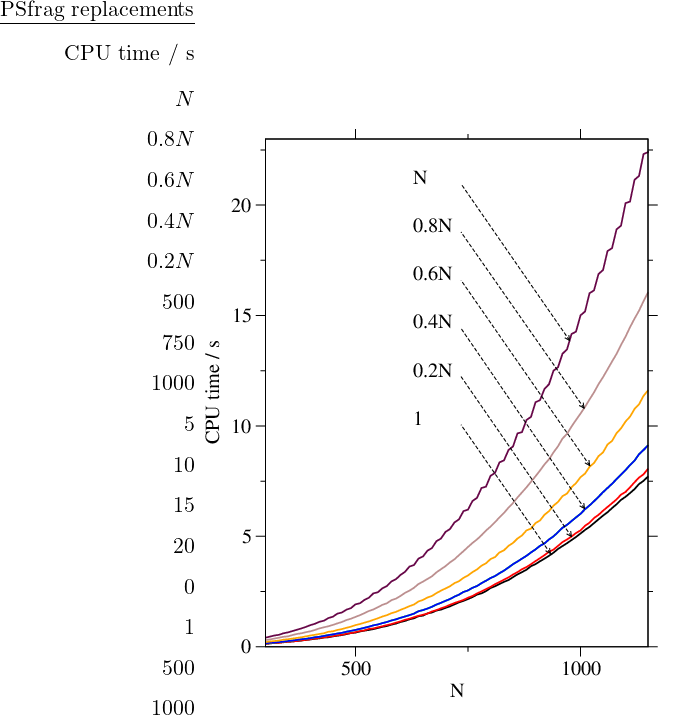

We have implemented the algorithm in Fortran 95 and timed it for complete graphs of different sizes. The results presented in Figure 4.9 confirm the overall cubic scaling. The program is GPL-licensed [273] and available online [274]. These and other benchmarks presented in this chapter were obtained for a single Intel Pentium 4 3.00GHz 512Kb cache processor running under the Debian GNU/Linux operating system [275]. The code was compiled and optimised using the Intel Fortran compiler for Linux.

Algorithm B.3 could easily be adapted to use adjacency-lists-based data structures [154], resulting in a faster execution and lower storage requirements for sparse graphs. We have implemented [274] a sparse-optimised version of Algorithm B.3 because the graph representations of the Markov chains of interest in the present work are sparse [201].

The algorithm for detaching a single intermediate node from an arbitrary graph stored in a sparse-optimised format is given in Algorithm B.4. Having chosen the node to be removed, , all the neighbours are analysed in turn, as follows. Lines 3-9 of Algorithm B.4 find node in the adjacency list of node . If is not a sink, lines 11-34 are executed to modify the adjacency list of node : lines 13-14 delete node from the adjacency list of , while lines 15-30 make all the neighbours of node the neighbours of . The symbol denotes the union minus the intersection of two sets, otherwise known as the symmetric difference. If the edge already existed only the branching probability is changed (line 21). Otherwise, a new edge is created and the adjacency and branching probability lists are modified accordingly (line 26 and line 27, respectively). Finally, the branching probabilities of node are renormalised (lines 31-33) and the waiting time for node is increased (line 34).

Algorithm B.4 is invoked iteratively for every node that is neither a source nor a sink to yield a graph that is composed of source nodes and sink nodes only. Then the procedure described in Section 4.4 for disconnection of source nodes (lines 17-34 of Algorithm B.3) is applied to obtain the mean escape times for every source node. The sparse-optimised version of the second part of Algorithm B.3 is straightforward and is therefore omitted here for brevity.

The running time of Algorithm B.4 is , where is the degree of node . For the case when all the nodes in a graph have approximately the same degree, , the complexity is . Therefore, if there are intermediate nodes to be detached and is of the same order of magnitude as , the cost of detaching nodes is . The asymptotic bound is worse than that of Algorithm B.3 because of the searches through adjacency lists (lines 3-9 and lines 19-24). If is sufficiently small the algorithm based on adjacency lists is faster.

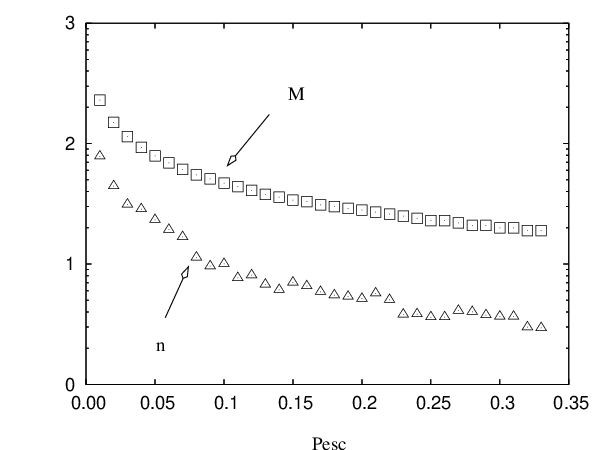

After each invocation of Algorithm B.4 the number of nodes is always decreased by one. The number of edges, however, can increase or decrease depending on the in- and out-degree of the node to be removed and the connectivity of its neighbours. If node is not directly connected to any of the sinks, and the neighbours of node are not connected to each other directly, the total number of edges is increased by . Therefore, the number of edges decreases (by ) only when , and the number of edges does not change if the degree is . For the number of edges increases by an amount that grows quadratically with . The actual increase depends on how many connections already existed between the neighbours of .

The order in which the intermediate nodes are detached does not change the final result and is unimportant if the graph is complete. For sparse graphs, however, the order can affect the running time significantly. If the degree distribution for successive graphs is sharp with the same average, , then the order in which the nodes are removed does not affect the complexity, which is . If the distributions are broad it is helpful to remove the nodes with smaller degrees first. A Fibonacci heap min-priority queue [276] was successfully used to achieve this result. The overhead for maintaining a heap is increase-key operations (of each) per execution of Algorithm B.4. Fortran and Python implementations of Algorithm B.4 algorithm are available online [274].

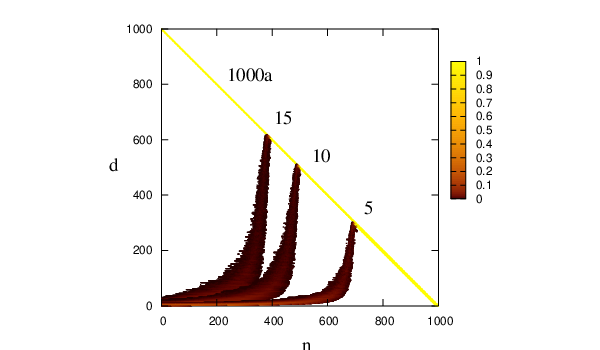

Random graphs provide an ideal testbed for the GT algorithm by providing control over the graph density. A random graph, , is obtained by starting with a set of nodes and adding edges between them at random [33]. In this work we used a random graph model where each edge is chosen independently with probability , where is the target value for the average degree.

The complexity for removal of nodes can then be expressed as

| (4.43) |

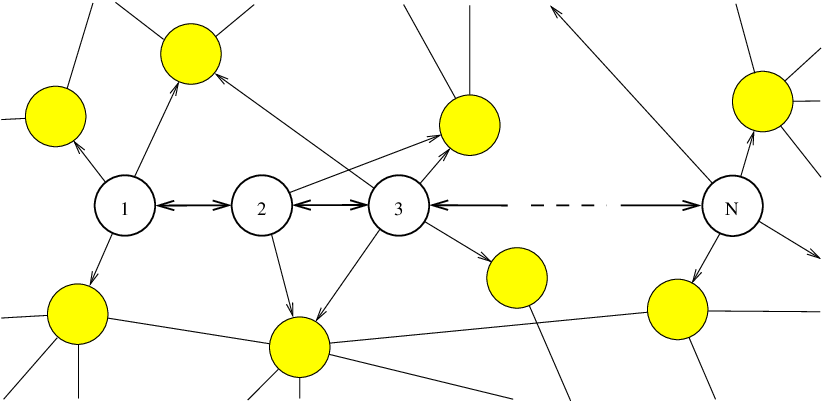

where is the degree of the node, , removed at iteration , is its adjacency list, and is the degree of the th neighbour of that node at iteration . The computational cost given in Equation 4.43 is difficult to express in terms of the parameters of the original graph, as the cost of every cycle depends on the distribution of degrees, the evolution of which, in turn, is dependent on the connectivity of the original graph in a non-trivial manner (see Figure 4.10). The storage requirements of a sparse-optimised version of GT algorithm scale linearly with the number of edges.

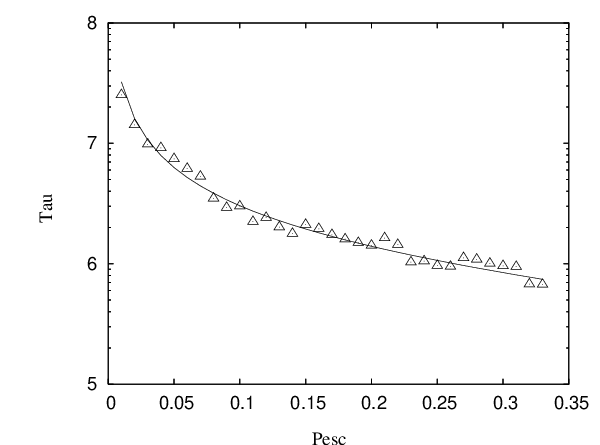

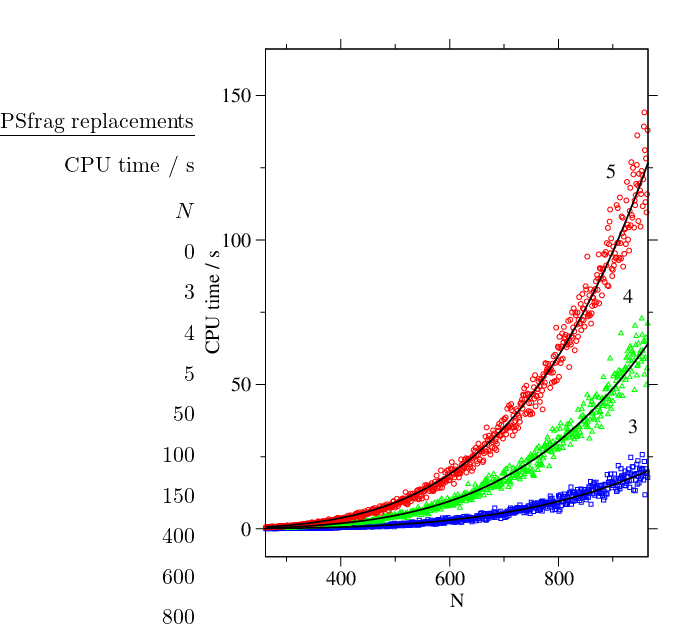

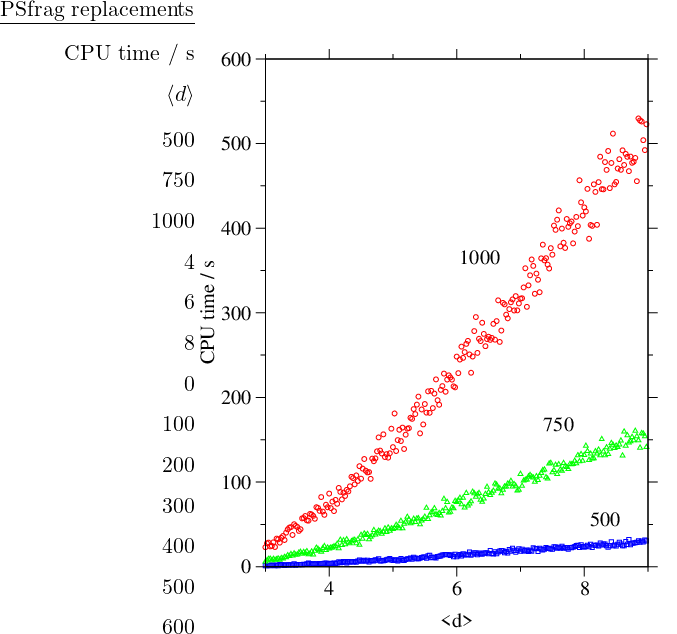

To investigate the dependence of the cost of the GT method on the number of nodes, , we have tested it on a series of random graphs for different values of and fixed average degree, . The results for three different values of are shown in Figure 4.11. The motivation for choosing from the interval was the fact that most of our stationary point databases have average connectivities for the local minima that fall into this range.

It can be seen from Figure 4.11 that for sparse random graphs the cost scales as with a small -dependent prefactor. The dependence of the computational complexity on is illustrated in Figure 4.12.

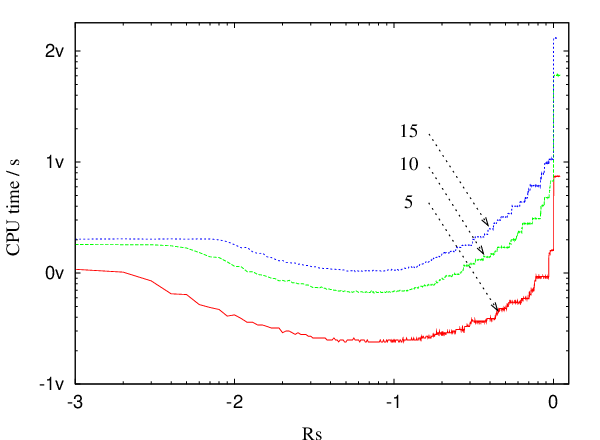

From Figure 4.10 it is apparent that at some point during the execution of the GT algorithm the graph reaches its maximum possible density. Once the graph is close to complete it is no longer efficient to employ a sparse-optimised algorithm. The most efficient approach we have found for sparse graphs is to use the sparse-optimised GT algorithm until the graph is dense enough, and then switch to Algorithm B.3. We will refer to this approach as SDGT. The change of data structures constitutes a negligible fraction of the total execution time. Figure 4.13 depicts the dependence of the CPU time as a function of the switching parameter .

Whenever the ratio , where the is the degree of intermediate node detached at iteration , and is the number of the nodes on a heap at iteration , is greater than , the partially transformed graph is converted from the adjacency list format into adjacency matrix format and the transformation is continued using Algorithm B.3. It can be seen from Figure 4.10 that for the case of a random graphs with a single sink, a single source and 999 intermediate nodes the optimal values of lie in the interval .

We now return to the digraph representation of a Markov chain that corresponds to the DPS pathway ensemble discussed in Section 4.1.4. A problem with (partially) overlapping sets of sources and sinks can easily be converted into an equivalent problem where there is no overlap, and then the GT method discussed in Section 4.4 and Section 4.5 can be applied as normal.

As discussed above, solving a problem with sources reduces to solving single-source problems. We can therefore explain how to deal with a problem of overlapping sets of sinks and sources for a simple example where there is a single source-sink and, optionally, a number of sink nodes.

First, a new node is added to the set of sinks and its adjacency lists are initialised to and . Then, for every node we update its waiting time as and add node to the set of sources with probabilistic weight initialised to , where is the original probabilistic weight of source (the probability of choosing source from the set of sources). Afterwards, the node is deleted.

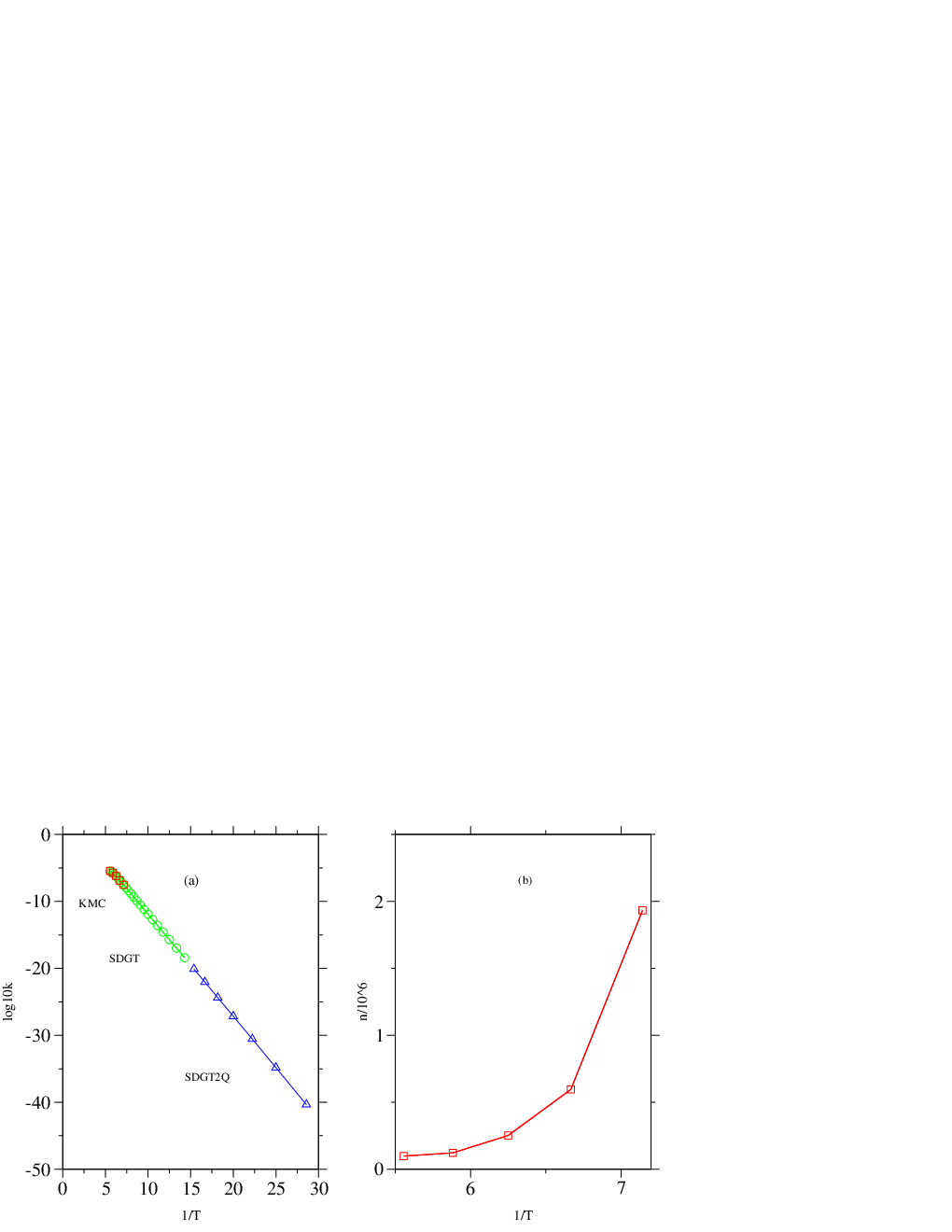

We have applied the GT method to study the temperature dependence of the rate of interconversion of 38-atom Lennard-Jones cluster. The PES sample was taken from a previous study [8] and contained 1740 minima and 2072 transition states. Only geometrically distinct structures were considered when generating this sample because this way the dimensionality of the problem is reduced approximately by a factor of , where is the order of the point group. Initial and final states in this sample roughly correspond to icosahedral-like and octahedral-like structures on the PES of this cluster. The assignment was made in Reference [8] by solving master equation numerically to find the eigenvector that corresponds to the smallest non-zero eigenvalue. As simple two-state dynamics are associated with exponential rise and decay of occupation probabilities there must exist a time scale on which all the exponential contributions to the solution of the master equation decay to zero except for the slowest [9]. The sign of the components of the eigenvector corresponding to the slowest mode was used to classify the minima as or in character [8].

The above sample was pruned to ensure that every minimum is reachable from any other minimum to create a digraph representation that contained 759 nodes including 43 source nodes and 5 sink nodes, and 2639 edges. The minimal, average and maximal degree for this graph were 2, and 84, respectively, and the graph density was . We have used the SDGT algorithm with the switching ratio set to to transform this graph for several values of temperature. In each of these calculations 622 out of 711 intermediate nodes were detached using SGT, and the remaining 89 intermediate nodes were detached using the GT algorithm optimised for dense graphs (DGT).

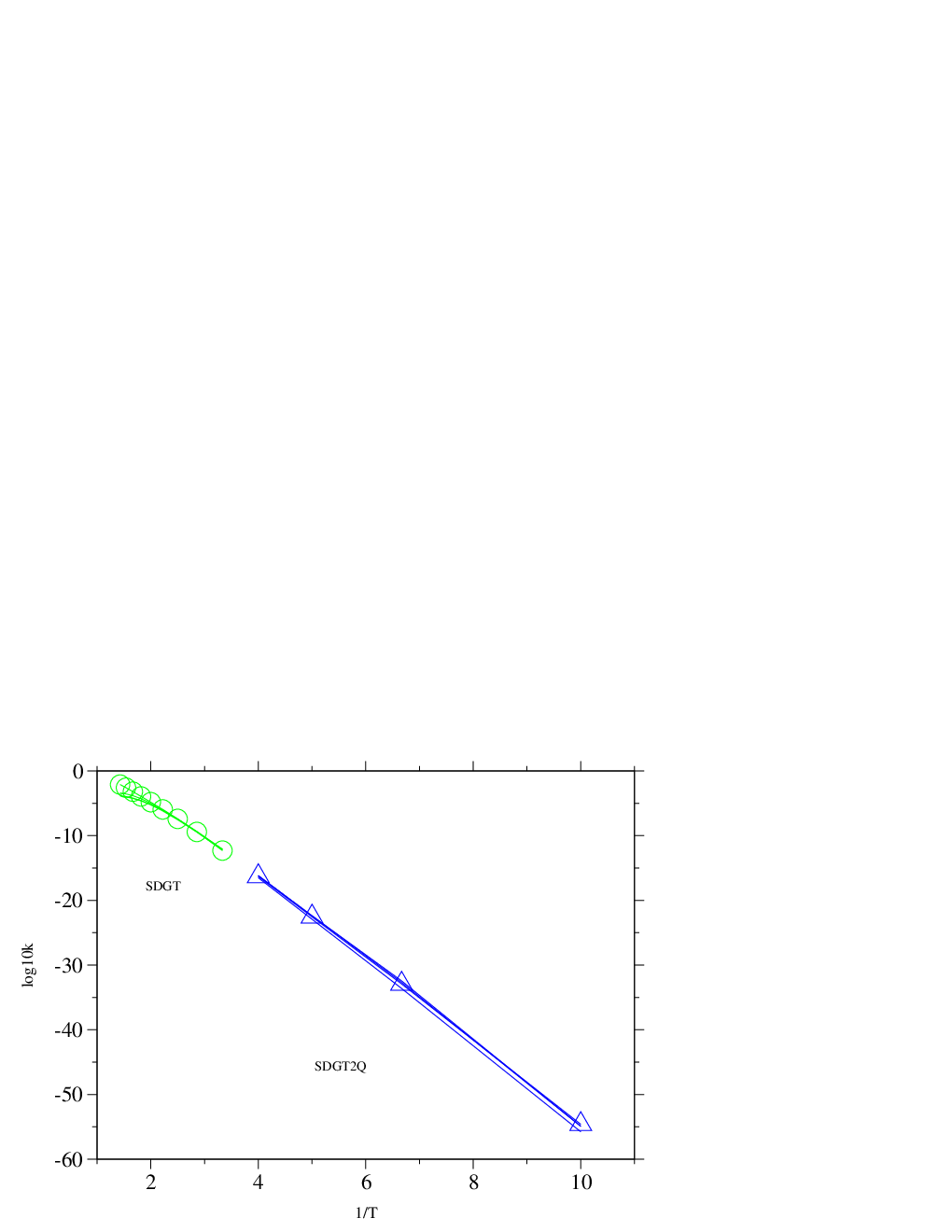

An Arrhenius plot depicting the dependence of the rate constant on temperature is shown in Figure 4.14 (a). The running time of SDGT algorithm was seconds [this value was obtained by averaging over 10 runs and was the same for each SDGT run in Figure 4.14 (a)]. For comparison, the timings obtained using the SGT and DGT algorithms for the same problem were and seconds, respectively. None of the 43 total escape probabilities (one for every source) deviated from unity by more than for temperatures above (reduced units). For lower temperatures the probability was not conserved due to numerical imprecision.

The data obtained using SDGT method is compared with results from KMC simulation, which require increasingly more CPU time as the temperature is lowered. Figure 4.14 also shows the data for KMC simulations at temperatures , , , and . Each KMC simulation consisted of the generation of an ensemble of 1000 KMC trajectories from which the averages were computed. The cost of each KMC calculation is proportional to the average trajectory length, which is depicted in Figure 4.14 (b) as a function of the inverse temperature. The CPU timings for each of these calculations were (in the order of increasing temperature, averaged over five randomly seeded KMC simulations): , , , , and seconds. It can be seen that using GT method we were able to obtain kinetic data for a wider range of temperatures and with less computational expense.

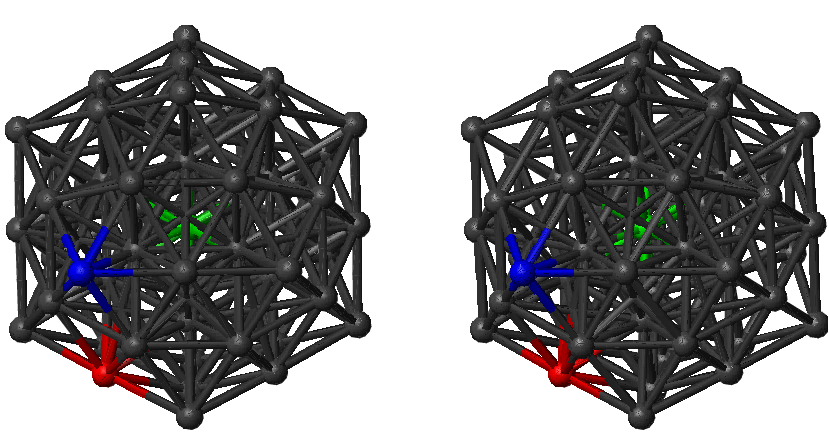

We have applied the graph transformation method to study the centre-to-surface atom migration in 55-atom Lennard-Jones cluster. The global potential energy minimum for LJ is a Mackay icosahedron, which exhibits special stability and ‘magic number’ properties [279, 280]. Centre-to-surface and surface-to-centre rates of migration of a tagged atom for this system were considered in previous work [10]. In Reference [10] the standard DPS procedure was applied to create and converge an ensemble of paths linking the structure of the global minimum with the tagged atom occupying the central position and structures where tagged atom is placed in sites that lie on fivefold and twofold symmetry axes (Figure 4.15). We have reused this sample in the present work.

The sample contained 9907 minima and 19384 transition states. We excluded transition states that facilitate degenerate rearrangements from consideration. For minima interconnected by more than one transition state we added the rate constants in each direction to calculate the branching probabilities. Four digraph representations were created with minimum degrees of 1, 2, 3 and 4 via iterative removal of the nodes with degrees that did not satisfy the requirement. These digraphs will be referred to as digraphs 1, 2, 3 and 4, respectively. The corresponding parameters are summarised in Table 4.1. Since the cost of the GT method does not depend on temperature we also quote CPU timings for the DGT, SGT and SDGT methods for each of these graphs in the last three columns of Table 4.1. Each digraph contained two source nodes labelled and and a single sink. The sink node corresponds to the global minimum with the tagged atom in the centre (Figure 4.15). It is noteworthy that the densities of the graphs corresponding to both our samples (LJ and LJ) are significantly lower than the values predicted for a complete sample [115], which makes the use of sparse-optimised methods even more advantageous. From Table 4.1 it is clear that the SDGT approach is the fastest, as expected; we will use SDGT for all the rate calculations in the rest of this section.

1 3. 9 983 3. 6 10 5. 71 20 2346. 1 39. 6 1. 36 2 4. 8 983 6. 5 9 4. 86 17 1016. 1 38. 9 1. 33 3 7. 9 873 29. 5 4 3. 63 8 46. 9 5. 9 0. 49 4 1. 9 680 101. 0 4 3. 07 7 3. 1 0. 8 0. 12

For this sample KMC calculations are unfeasible at temperatures lower than about (Here is expressed in the units of ). Already for the average KMC trajectory length is (value obtained by averaging over 100 trajectories). In previous work it was therefore necessary to use the DPS formalism, which invokes a steady-state approximation for the intervening minima, to calculate the rate constant at temperatures below [10]. Here we report results that are in direct correspondence with the KMC formulation of the problem, for temperatures as low as .

Figure 4.16 presents Arrhenius plots that we calculated using the SDGT method for this system. The points in the green dataset are the results from seven SDGT calculations at temperatures conducted for each of the digraphs. The total escape probabilities, and , calculated for each of the two sources at the end of the calculation deviated from unity by no more than . For higher temperatures and smaller digraphs the deviation was smaller, being on the order of for digraph 4 at , and improving at higher temperatures and/or smaller graph sizes.

At temperatures lower than the probability deviated by more than due to numerical imprecision. This problem was partially caused by the round-off errors in evaluation of terms , which increase when approaches unity. These errors can propagate and amplify as the evaluation proceeds. By writing

| (4.44) |

and then using

| (4.45) |

we were able to decrease by several orders of magnitude at the expense of doubling the computational cost. The SDGT method with probability denominators evaluated in this fashion will be referred to as SDGT1.

Terms of the form approach zero when nodes and become ‘effectively’ (i.e. within available precision) disconnected from the rest of the graph. If this condition is encountered in the intermediate stages of the calculation it could also mean that a larger subgraph of the original graph is effectively disconnected. The waiting time for escape if started from a node that belongs to this subgraph tends to infinity. If the probability of getting to such a node from any of the source nodes is close to zero the final answer may still fit into available precision, even though some of the intermediate values cannot. Obtaining the final answer in such cases can be problematic as division-by-zero exceptions may occur.

Another way to alleviate numerical problems at low temperatures is to stop round-off errors from propagation at early stages by renormalising the branching probabilities of affected nodes after node is detached. The corresponding check that the updated probabilities of node add up to unity could be inserted after line 33 of Algorithm B.4 (see Appendix B), and similarly for Algorithm B.3. A version of SDGT method with this modification will be referred to as SDGT2.

Both SDGT1 and SDGT2 have similarly scaling overheads relative to the SDGT method. We did not find any evidence for superiority of one scheme over another. For example, the SDGT calculation performed for digraph 4 at yielded , and precision was lost as both and were less than . The SDGT1 calculation resulted in and , while the SDGT2 calculation produced with . The CPU time required to transform this graph using our implementations of the SDGT1 and SDGT2 methods was and seconds, respectively.

To calculate the rates at temperatures in the interval reliably we used an implementation of the SDGT2 method compiled with quadruple precision (SDGT2Q) (note that the architecture is the same as in other benchmarks, i.e. with 32 bit wide registers). The points in the blue dataset in Figure 4.16 are the results from 4 SDGT2Q calculations at temperatures .

By using SDGT2Q we were also able to improve on the low-temperature results for LJ presented in the previous section. The corresponding data is shown in blue in Figure 4.14.

The most important result of this chapter is probably the graph transformation (GT) method. The method works with a digraph representation of a Markov chain and can be used to calculate the first moment of a distribution of the first-passage times, as well as the total transition probabilities for an arbitrary digraph with sets of sources and sinks that can overlap. The calculation is performed in a non-iterative and non-stochastic manner, and the number of operations is independent of the simulation temperature.

We have presented three implementations of the GT algorithm: sparse-optimised (SGT), dense-optimised (DGT), and hybrid (SDGT), which is a combination of the first two. The SGT method uses a Fibonacci heap min-priority queue to determine the order in which the intermediate nodes are detached to achieve slower growth of the graph density and, consequently, better performance. SDGT is identical to DGT if the graph is dense. For sparse graphs SDGT performs better then SGT because it switches to DGT when the density of a graph being transformed approaches the maximum. We find that for SDGT method performs well both for sparse and dense graphs. The worst case asymptotic scaling of SDGT is cubic.

We have also suggested two versions of the SDGT method that can be used in calculations where a greater degree of precision is required. The code that implements SGT, DGT, SDGT, SDGT1 and SDGT2 methods is available for download [274].

The connection between the DPS and KMC approaches was discussed in terms of digraph representations of Markov chains. We showed that rate constants obtained using the KMC or DPS methods can be computed using graph transformation. We have presented applications to the isomerisation of the LJ cluster and the internal diffusion in the LJ cluster. Using the GT method we were able to calculate rate constants at lower temperatures than was previously possible, and with less computational expense.

We also obtained analytic expressions for the total transition probabilities for arbitrary digraphs in terms of combinatorial sums over pathway ensembles. It is hoped that these results will help in further theoretical pursuits, e.g. these aimed at obtaining higher moments of the distribution of the first passage times for arbitrary Markov chains.

Finally, we showed that the recrossing contribution to the DPS rate constant of a given discrete pathway can be calculated exactly. We presented a comparison between a sparse-optimised matrix multiplication method and a sparse-optimised version of Algorithm B.1 and showed that it is beneficial to use Algorithm B.1 because it is many orders of magnitude faster, runs in linear time and has constant memory requirements.